Autonomous Driving

Sensor Fusion

We deliver machine learning modules and functions for all sensor types, embedded and with real-time performance.

Our unique fusion of different sensors, such as laser, radar and camera, allows you to perform various inspection and surveillance missions in real time. In combination with our AI applications, you can assist your perception automatically and evaluate the results directly against the required specifications. This does more than speed up your work: it lets you identify hazards and problems quickly.

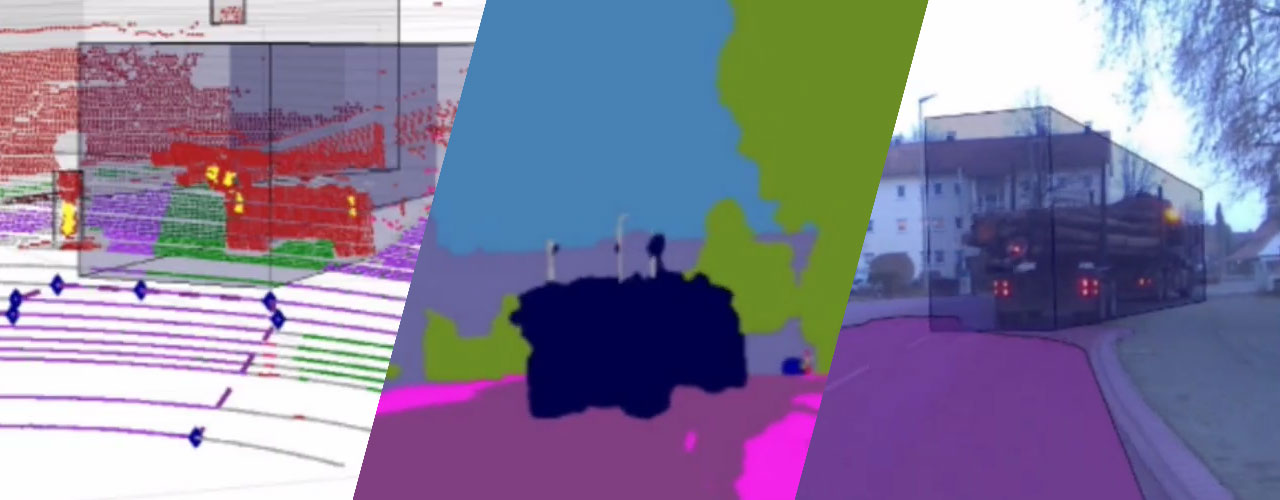

Safe Collision Avoidance

For safe autonomous mobility you need an understanding of your environment to react to it.

This perception driving demo answers the question: what does the vehicle see? 1. What does the camera see? 2. What does sensor fusion see? 3. What does the AI see? Toggle between 360° camera (3D), Sensor Fusion (Camera + Lidar) and AI-based Semantic Segmentation. The test drive captures an ordinary traffic scene with different corner cases. Our system guarantees sensor alignment in every direction, giving a complete perception for safe collision avoidance: 2D and 3D boxes on all sensors and a panoptic segmentation of the environment.
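As a rough illustration of what camera + lidar fusion involves, the sketch below projects lidar points into a camera image with a pinhole model. It is not the implementation behind the demo: the function names, the calibration values (`rotation`, `translation`, `fx`, `fy`, `cx`, `cy`) and the image size are all assumptions chosen for the example.

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def transform_point(rotation: Sequence[Sequence[float]],
                    translation: Sequence[float],
                    point: Point3D) -> Point3D:
    """Apply a rigid transform (hypothetical lidar frame -> camera frame)."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project_to_pixel(point_cam: Point3D, fx: float, fy: float,
                     cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection; returns None for points behind the camera."""
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def fuse(points_lidar: List[Point3D], rotation, translation,
         fx: float, fy: float, cx: float, cy: float,
         width: int, height: int) -> List[Tuple[float, float, float]]:
    """Return (u, v, depth) for every lidar point that lands inside the image."""
    fused = []
    for point in points_lidar:
        point_cam = transform_point(rotation, translation, point)
        pixel = project_to_pixel(point_cam, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if 0 <= u < width and 0 <= v < height:
            fused.append((u, v, point_cam[2]))
    return fused


# Assumed calibration: lidar and camera frames coincide (identity transform).
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -5.0), (100.0, 0.0, 1.0)]
pixels = fuse(points, IDENTITY, [0.0, 0.0, 0.0], 100.0, 100.0, 320.0, 240.0, 640, 480)
```

Each surviving entry pairs a pixel position with a measured depth, which is the kind of per-pixel alignment that 3D boxes or a segmentation overlay can then build on.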

Visual & Lidar SLAM

With AI SLAM you get high Machine Learning Performance & Safety for various kinds of autonomy functions.

Our AI-based Lidar and Visual SLAM reasons semantically about every 3D point around the vehicle. This leads to a completely interpretable 3D map for full autonomy. A 3D interpretation enables full process automation, such as automatic infrastructure analysis (buildings, streets, rails) or agricultural use cases such as tree counting.

3D Mapping

Get high-precision 3D Maps with our Lidar SLAM. Our Maps are faster and smarter.

With our AI Lidar SLAM (Simultaneous Localization and Mapping) you create centimeter-accurate 3D maps of a building, a construction site, an excavation site, a tunnel, a production line, a road, a field, etc. in real time. Processing the data on board your vehicle allows you to react quickly and specifically to short-term changes. With scalable AI, we reduce the amount of labelled data needed through domain adaptation and thus reduce your costs to a fraction of those of previous methods. Additionally, 3D SLAM makes your vehicle more independent of GPS communication, because it allows the vehicle to localize itself.
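To make the idea of a vehicle localizing itself without GPS more concrete, here is a minimal sketch of one ingredient: chaining relative motion estimates into a global 2D pose. It is a simplification and not the SLAM pipeline described here; a real system additionally corrects accumulated drift against the map. The names `compose` and `integrate` and the example motions are hypothetical.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # x [m], y [m], heading [rad]


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a motion `delta`, expressed in the vehicle frame, to a global pose."""
    x, y, theta = pose
    dx, dy, dtheta = delta
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        # Wrap the heading into [-pi, pi).
        (theta + dtheta + math.pi) % (2.0 * math.pi) - math.pi,
    )


def integrate(start: Pose, deltas: List[Pose]) -> List[Pose]:
    """Chain relative motions (e.g. from scan matching) into a trajectory."""
    trajectory = [start]
    for delta in deltas:
        trajectory.append(compose(trajectory[-1], delta))
    return trajectory


# Drive 1 m forward while turning left by 90 degrees, then 1 m forward again.
trajectory = integrate((0.0, 0.0, 0.0), [(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)])
final_x, final_y, final_heading = trajectory[-1]
```

In this example the vehicle ends up roughly one meter ahead and one meter to the left of its start, facing left, without any external position fix.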

Decision Making

We climb up the ladder of automation towards levels 3, 4 and 5.

These complex systems require decisions with human-level precision, in real time, for perception, planning and automation. To replicate these structures, highly effective deep learning algorithms and a data strategy for training them are required. For this purpose we use self-supervised learning, a relatively new machine learning technique in which the training data is labelled automatically.
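The idea of automatically labelled training data can be illustrated with a common textbook pretext task, rotation prediction: each image is rotated by a known angle, and that angle becomes the label. This is only a sketch of the general principle, not necessarily the task used here; `rotate90` and `make_rotation_samples` are hypothetical helper names.

```python
from typing import List, Tuple

Image = List[List[int]]


def rotate90(image: Image) -> Image:
    """Rotate a 2D grid (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def make_rotation_samples(image: Image) -> List[Tuple[Image, int]]:
    """Return four (rotated_image, label) pairs; label k means k * 90 degrees.

    No human annotation is involved: the label follows from the
    transformation that was applied to the unlabelled input.
    """
    samples = []
    current = [list(row) for row in image]
    for k in range(4):
        samples.append((current, k))
        current = rotate90(current)
    return samples


unlabelled = [[1, 2], [3, 4]]
samples = make_rotation_samples(unlabelled)
labels = [label for _, label in samples]
```

A model trained to predict these labels has to learn something about image structure, and the resulting features can then be reused for tasks where labelled data is scarce.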

Fully Autonomous Architecture

Safety by Design enables the coverage of critical assurance levels.

We designed a fully autonomous architecture for SAFE AI, based on modularity and sensor redundancy. This makes it possible to test all modules separately. The modularity guarantees redundant sensor paths, so we are able to fulfill the SOTIF (Safety of the Intended Functionality) standard. In concrete terms, this means that, for the first time, highly secure real-time systems for critical applications with integrated AI can be approved for series production and brought to market.
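One way to picture redundant sensor paths is a small cross-check between independent modules. The sketch below assumes each path reports an obstacle distance, or `None` if it has failed, and only accepts a result when at least two paths agree. The function name, thresholds and return format are assumptions for illustration, not the architecture described here.

```python
from statistics import median
from typing import Dict, Optional


def fuse_redundant_paths(readings: Dict[str, Optional[float]],
                         min_paths: int = 2,
                         tolerance_m: float = 1.0) -> dict:
    """Cross-check obstacle distances reported by independent sensor paths."""
    # Drop paths that reported a failure.
    valid = {name: dist for name, dist in readings.items() if dist is not None}
    if len(valid) < min_paths:
        return {"status": "degraded", "distance_m": None, "agreeing": sorted(valid)}

    # Paths agree if they lie within the tolerance of the median reading.
    center = median(valid.values())
    agreeing = sorted(name for name, dist in valid.items()
                      if abs(dist - center) <= tolerance_m)
    if len(agreeing) < min_paths:
        return {"status": "degraded", "distance_m": None, "agreeing": agreeing}

    # Among agreeing paths, report the nearest obstacle distance.
    distance = min(valid[name] for name in agreeing)
    return {"status": "ok", "distance_m": distance, "agreeing": agreeing}


normal = fuse_redundant_paths({"camera": 12.4, "lidar": 12.0, "radar": None})
single = fuse_redundant_paths({"camera": 12.0, "lidar": None, "radar": None})
conflict = fuse_redundant_paths({"camera": 5.0, "lidar": 20.0})
```

Because each path is a separate module with a plain input and output, each one can be tested on its own, and the cross-check can be tested with simulated failures such as the `None` readings above.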